Aimee van Wynsberghe is an Assistant Professor of Ethics of Technology at the University of Twente. In her speech, she raised a number of points that should be considered when developing robots and AI technology.

Ethics of robots

'Ethics is about living the good life, about what that means and how to achieve it. It's about defining what is right and wrong,' Van Wynsberghe said about her field of expertise. 'There are many ethical issues related to robots. There are the ethics of robots themselves, of the code programmed into the robot. Can a robot be a moral agent like a human and make life-and-death decisions? Is a robot responsible for its actions? If it isn't, who is: the designer, the manufacturer, the owner?'

'Then there are the ethics of the people making robots and of the people using them,' Van Wynsberghe continued. 'How should we treat robots? Can we kick them or damage them? What if they are part of the family, part of the community? Should they have rights? These are all open questions.'

Self-driving cars

If AI allows robots to function with greater autonomy, they will need to make decisions that are often too difficult even for humans. So what should these AI systems do? You might think this is a question for the distant future, but self-driving cars, a technology that already exists, face it today. How should these autonomous cars behave when confronted with such decisions?

'If it has to choose, should the car always kill as few people as possible? What if it has to choose between killing a stranger and killing your partner, for instance? Should it kill the least important person, or not kill anybody, roll onto its side and possibly kill you? Should we allow the car to learn from its mistakes?' Van Wynsberghe asked.

Falling in love with a robot

All of these open questions show that it might be extremely difficult, if not impossible, to teach robots right from wrong. 'We're not sure what criteria make a human a moral agent, so how can we program them into a robot?' said Van Wynsberghe. 'And how do we test the robots? Do we put them in a lab and see whom they choose to kill?'

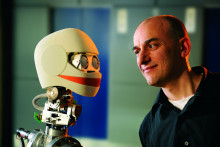

Even if we manage to find answers and create robots that are autonomous moral agents, more ethical issues arise. Once robots become part of our moral community, how should they be treated? What happens when people fall in love with AI systems? 'People already react to current technology, such as smartphones and laptops; because these devices help them, people appreciate them. What happens when you have a robot that acts like a person?' Van Wynsberghe pointed out.

Technology as part of good life

All of the above reminds us that the ethics of robots is just as important as their design, and that the related ethical issues should be addressed continuously while new technology is being developed. 'It's not about building the big "Terminator" that might eventually come and destroy us all. It's about all the small steps in between,' Van Wynsberghe concluded. 'Ethics allows us to define terms like consciousness or free will, and helps us develop future technology that contributes to the good life.'